AI-Native Systems Are The Default

A few weeks ago, I published Fintech x AI: The Next Great Intersection, the first of a two-part series, in which I shared the opportunities we are currently observing at the intersection of Fintech x AI. I covered emergent business models, the types of products taking shape and a framework for why AI and money must interact.

In this part, I cover the AI-native systems we are seeing our portfolio companies put in place to compete. This has far less to do with Fintech (if at all) and far more to do with the realities of today’s operating environment and the impact and dislocation AI has brought to it. I hope it serves as a guidepost for what it takes to survive in today’s environment, let alone compete.

AI Changes Everything

In 2023, the discourse centered on whether incumbents had the advantage with AI thanks to existing distribution, or startups had the advantage thanks to speed and agility.

In 2024/25, the discourse shifted toward the intensifying competition among seemingly alike startups shipping me-too products, all vying for attention through branding and loud PR.

The former is the classic, recurring argument of distribution versus innovation, while the latter is the current argument for seeking new forms of distribution in a loud and noisy world.

I think both miss the point. AI is not a feature that gets bolted onto a product, so the argument in favor of existing distribution does not typically hold. Similarly, the consumption, application and leverage of AI are not consistent across teams, which means no two startups, even with superficially identical feature sets, are ever the same.

AI is a new system of operating, and because of it, the table stakes for startups and incumbents to compete have increased tenfold. Success for startups has always been about endurance and compounding, both results of superior systems. It has always been about customer centricity, velocity and frontier tech as the tools to build products that matter, also results of superior systems. But with AI, the operating systems required to harness it effectively, from internal operations through product delivery and customer experience, have changed. How startups adapt and orient around this reality is an important litmus test for staying power, endurance and customer-centered personalization.

I won’t feign expertise by offering a winning formula for implementing AI-native systems in your company. I will, however, share our observations on how some of our own portfolio companies have adapted themselves into AI-native operating environments. I will also state that we strongly believe these are now necessary primitives to exist and compete. While winning might be partly a derivative of these systems, winning itself always requires a whole lot more.

- A System For Velocity, Not Speed

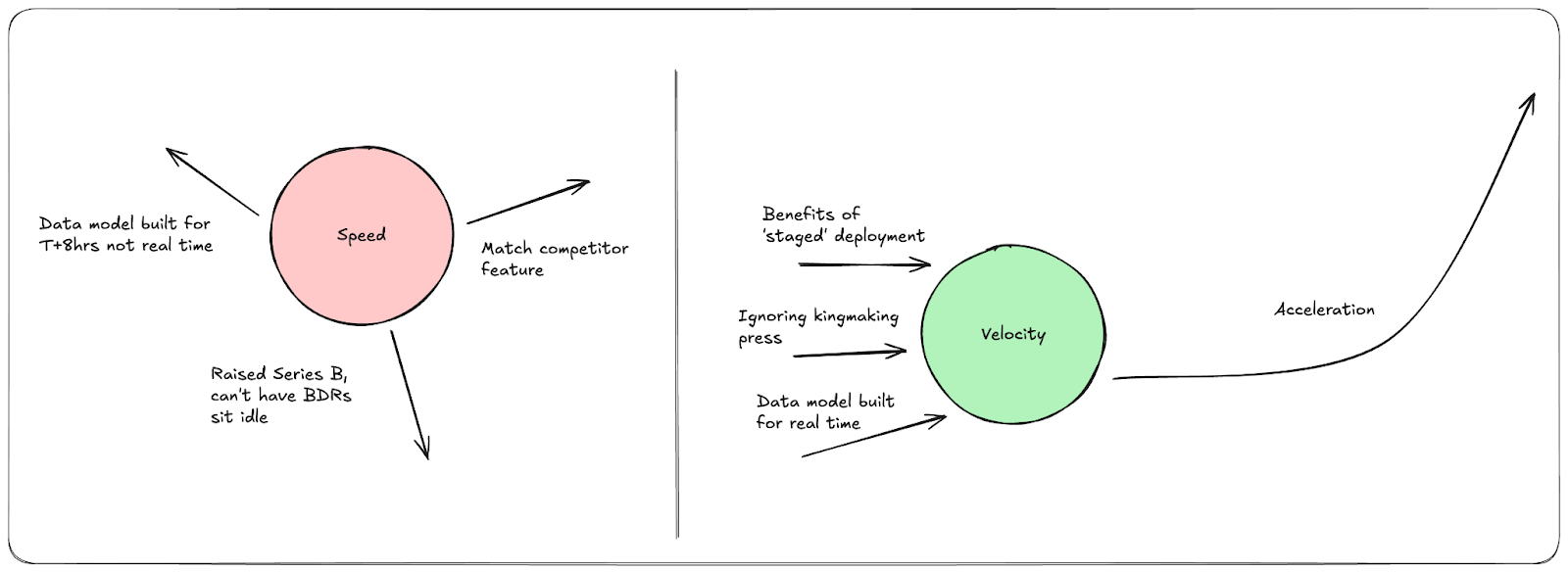

Direction matters. Moving at high speed in just two directions, let alone ten, likely leaves you where you started. Moving at high speed in a unified direction leads to acceleration. This matters.

Direction is not only about product direction. It’s about every team member’s attention, context and priorities at any given moment. When they are aligned, great things happen. When they are not, nothing happens.

With AI code generation, the pace at which a team can ship product is orders of magnitude higher. What used to take weeks or months can now take days. But left unconstrained, this also means bloated codebases, distracting feature chasing and ever-larger mountains of technical debt. And, generally, a lot of AI slop.

Clarity of vision matters. Yes, clarity of product vision, but that’s not all of it. Clarity in data schema matters. Hard, deliberate tradeoffs to ensure platform composability matter. Exceptional code and product design matter, sometimes at the expense of nominal urgency.

For the first time in close to a decade, teams can make difficult technical design choices that lookalike companies struggle to replicate, and these choices have significant downstream effects on product quality and scalability. This requires clarity, and clarity is direction.

In the crowded Revenue Operations space, Monk often takes the harder technical path. In a world where the best-capitalized company seizes attention, Monk is betting that true automation and scalability win.

- Context Rails

For AI, context matters. Companies today have a variety of internal and external surface areas of context. Think internal comms, codebases, product touchpoints and even data partnerships. Context from any of these surface areas proliferates in real time and, much like AI code generation, isn’t very effective if left unconstrained.

Not all context is created equal. AI leverage is only amplified when context is structured, precise, accessible and smart. The rails that companies build to capture, index and store context have never been more important. And as time in market for a product increases, the moats derived from mature and growing context rails are hard to outcompete.

Basis dedicates an internal team, Atlas, to this effort. Atlas’s sole priority is to ensure that any Basis employee, internal agent or customer-facing agent can leverage AI for any task, with the AI knowing exactly where to retrieve the right context for that specific request. Outpost combines in-house tax experts with real-time observability of global tax laws to build a knowledge base that AI can use for accurate tax calculation right at the point of sale.

- Deploying Intelligence

Palantir created the modern-day Forward Deployed Engineering (FDE) archetype. But on-site deployment teams, solutions engineers and project consultants have long existed, largely to ensure that large contract customers see a successful implementation, just as FDEs do.

Every AI company today wants to hire FDEs and I don’t blame them. Orchestrating your product and AI’s full effect on the customer side is not easy and it’s certainly not self-serve. The need to parachute in, stitch together data silos, coordinate across stakeholder workflows and incentivize human adoption and behavior change is real and challenging.

But teams that approach this as a staffing and sales problem will likely falter, or at the very least won’t be doing anything different from prior SaaS waves. Diffusion of AI is less a technical and GTM problem than a behavioral change problem with massive societal impact (and barriers). Teams that treat deployment as an extension of their product experience and an additional surface area for context gathering, behavioral change and monetization will persist.

Basis, Inscope and Meroka place thoughtful time and investment into deployment initiatives that are not only technical but also emotionally oriented, like training workshops and co-creation opportunities for new products to be co-sold.

TBPN (@tbpn), November 9, 2025: Founding Partner at @friendsfamcap Colin Anderson on forward-deployed engineering; when it works and when it doesn’t.

“The FD model is great in certain markets, terrible in others… you’ve got to be solving a hard enough problem.”

“A world-class engineer plus their equity…”

- Fine Tuning

Foundation models are getting cheaper and smarter every day. At the same time, foundation model companies are creeping further into the app layer. I think there is plenty of the app layer that OpenAI, Anthropic and others will own. The questions I often think about are which applications general intelligence is best suited to excel in, and which applications are big enough for general intelligence providers to want to adapt into. Clarity here leads me to a more important question (as an investor): specialized intelligence. Specifically, in what areas of the world will specialized intelligence be a necessary condition for delivering quality products and experiences? Specialized intelligence can result in app layer companies or other forms, such as full-stack AI businesses.

Specialized intelligence is the output of highly contextual data gathered through extreme customer centricity and proximity to the tasks and jobs to be done in the analog world, or between the analog and digital worlds.

The teams that are able to productize this into software can orchestrate and fine-tune for layers of deterministic, specific workflows. Under the hood, the product ends up looking like a beautiful snowflake: a network of finely tuned models, each applied to a very atomic, focused task or job to be done. This isolates outcomes, context and accuracy such that the collective output is hard to replicate or compete with, a legitimate product moat against foundation model scope creep.

Docshield and Blaise have built entire middle- and back-office workflows found at insurance brokerages and carriers, now operated through agents: hundreds of specific, isolated tasks working perfectly in tandem to simulate the operations of a traditionally human-dependent organization.

Closing Thoughts

AI-native systems are the new default for survival. Teams that are thoughtful and deliberate in their approach are also using some of these systems as competitive advantages for product, operating leverage or simply new ways to monetize. The cost of not adapting is, at the very least, akin to the cost of discounting cloud nativity in the previous decade. And because of these systems, short-term parity among startups means nothing; over the long term, the compounding effects of velocity, context gravity and difficult technical tradeoffs will be enormous.